500px Tech Weekly 005

Overview

1. Video basics

2. Popular video players today

3. A look at the video feature in NetEase News

Video basics

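Streaming media services

Streaming media is audio, video, and other multimedia delivered over a network as a stream. Compared with download-then-watch playback, its defining feature is that continuous audio and video are compressed and placed on a network server, and the user watches while the data downloads instead of waiting for the whole file to finish downloading.

Live streaming vs. video on demand

Live streaming: one end records while the other end plays the content at the same time. Examples: 微吼, 保利威视, and live-streaming websites.

Video on demand: the content is recorded first, then stored and distributed to cloud servers. When a user wants to watch, the file is fetched over the internet and played. Examples: 未来云, 保利威视, 石山视频.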

Popular video players today

FFmpeg

FFmpeg is a collection of libraries and tools to process multimedia content such as audio, video, subtitles and related metadata.

Libraries

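libavcodec provides implementation of a wider range of codecs.

libavcodec is the library that contains the audio/video decoders and encoders.

libavformat implements streaming protocols, container formats and basic I/O access.

libavformat is the library that contains the demuxers and muxers for multimedia container formats.

libavutil includes hashers, decompressors and miscellaneous utility functions.

libavutil is a library of functions that simplify programming, including random number generators, data structures, math routines, core multimedia utilities, and more.

libavfilter provides a mean to alter decoded Audio and Video through chain of filters.

libavfilter provides filters for audio and video, for example adding a watermark to a video.

libavdevice provides an abstraction to access capture and playback devices.

libavdevice is a library of input and output devices, used to grab from and render to many common multimedia input/output software frameworks.

libswresample implements audio mixing and resampling routines.

libswresample is a library that performs highly optimized audio resampling, rematrixing, and sample format conversion.

libswscale implements color conversion and scaling routines.

libswscale is a library that performs highly optimized image scaling and color space/pixel format conversion.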

Tools

- ffmpeg is a command line toolbox to manipulate, convert and stream multimedia content.

ffmpeg is the command-line program, for example: ffmpeg -i input.mp4 output.avi

- ffplay is a minimalistic multimedia player.

ffplay is the player.

- ffprobe is a simple analysis tool to inspect multimedia content.

ffprobe is mainly used to view information about a multimedia file.

- ffserver is a multimedia streaming server for live broadcasts.

ffserver can be used to set up a streaming media service. (ffserver was removed from FFmpeg as of the 4.0 release.)

- Additional small tools such as aviocat, ismindex and qt-faststart.

The rest are small utilities.

ijkplayer

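https://github.com/Bilibili/ijkplayer is an open-source player from Bilibili, based on FFmpeg. It comes in Android and iOS versions.

Built-in iOS players

Before iOS 9.0, the MediaPlayer framework was used; for quick integration, use MPMoviePlayerViewController.

From iOS 9.0 on, the AVKit framework is used; for quick integration, use AVPlayerViewController.

For a custom player, use AVPlayer.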
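As a rough illustration of the quick-integration path, here is a minimal sketch of presenting AVPlayerViewController. The helper name PresentSystemPlayer and its parameters are hypothetical, and the snippet assumes ARC and an existing view controller to present from.

```objc
#import <UIKit/UIKit.h>
#import <AVKit/AVKit.h>
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper (not from the original post): presents the system
// player UI for `videoURL` on top of `presenter`.
static void PresentSystemPlayer(UIViewController *presenter, NSURL *videoURL) {
    AVPlayerViewController *playerVC = [[AVPlayerViewController alloc] init];
    // AVPlayerViewController only supplies the UI; playback is driven by AVPlayer.
    playerVC.player = [AVPlayer playerWithURL:videoURL];
    [presenter presentViewController:playerVC animated:YES completion:^{
        // Start playback once the player UI is on screen.
        [playerVC.player play];
    }];
}
```

Because AVPlayerViewController supplies the standard controls, this path trades flexibility for speed; a fully custom interface would be built on AVPlayer directly.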

猪厂网易新闻中的视频功能赏析

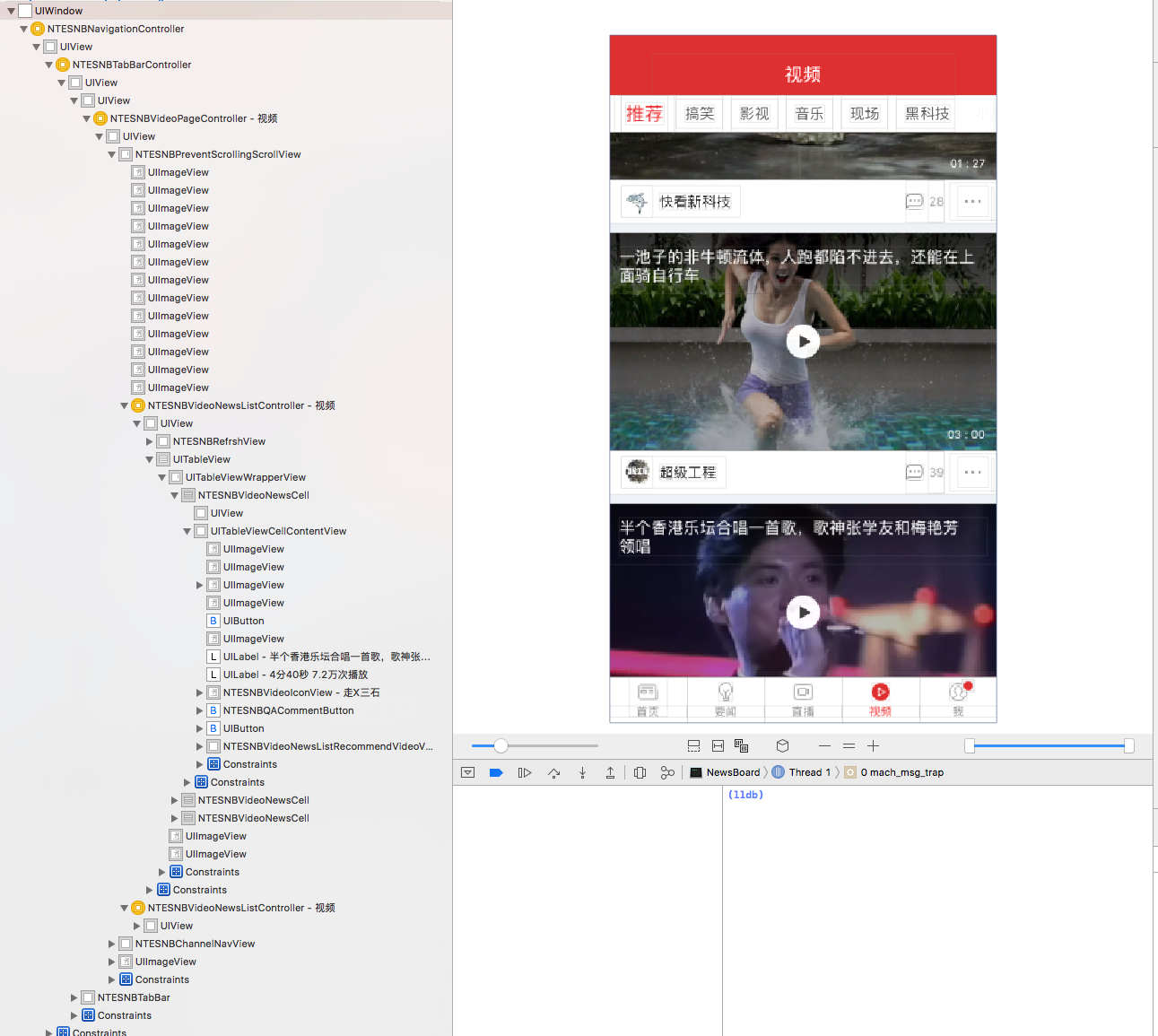

先来看看 未打开视频的状态

图中并没有看到视频播放器相关的控件

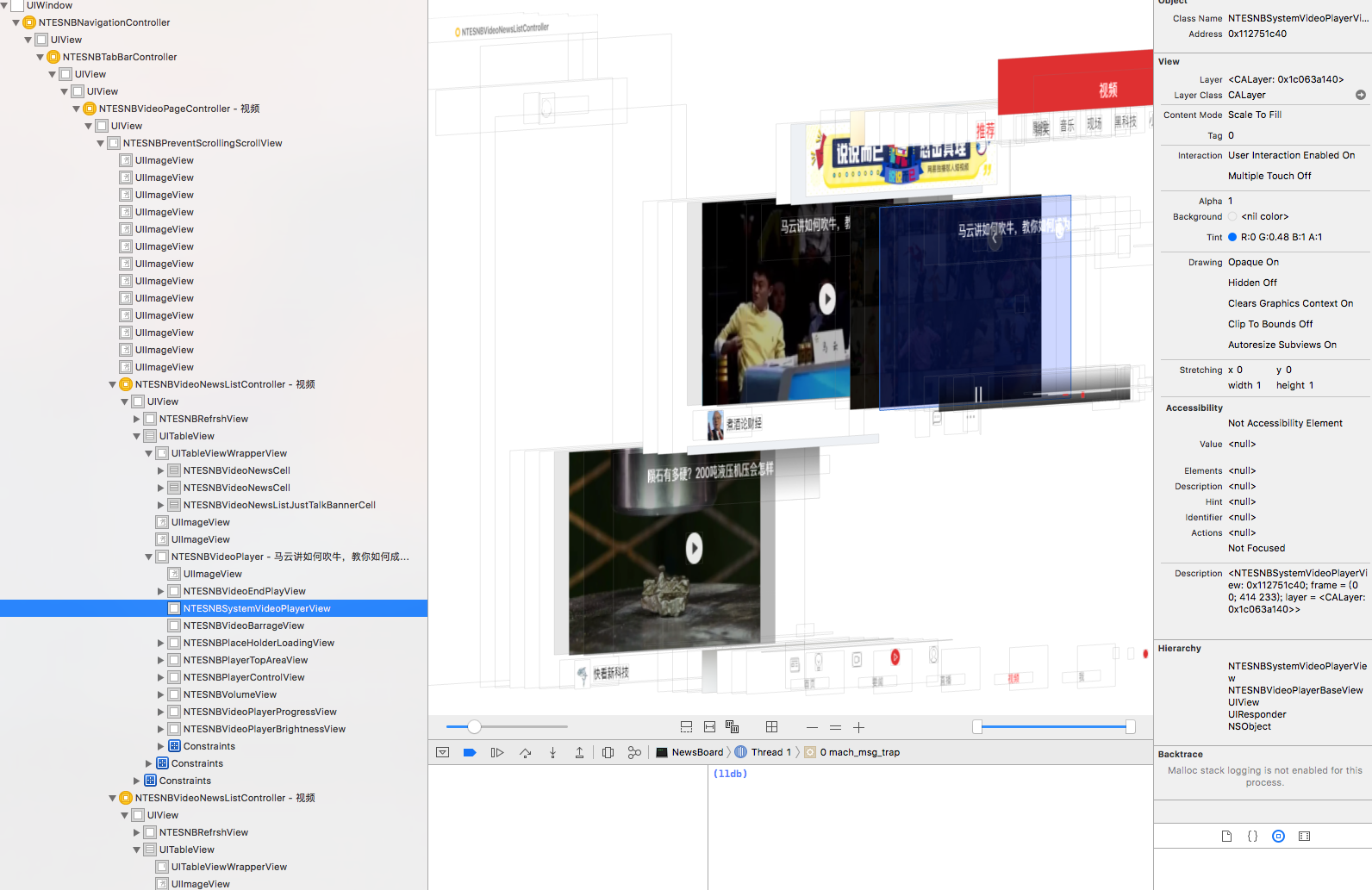

打开视频的状态

可以看到UITableView中有了视频播放控件NTESNBVideoPlayer,但是并没有在cell中,而是与cell平级。图中选中的是NTESNBSystemVideoPlayerView,它继承自NTESNBVideoPlayerBaseView

#import "NTESNBVideoPlayerBaseView.h"

@class AVPlayer, AVPlayerItem, AVPlayerLayer, NSError, NSURL;

@interface NTESNBSystemVideoPlayerView : NTESNBVideoPlayerBaseView

{

id _playProgressObserver;

_Bool _playable;

_Bool _muted;

_Bool _isStoped;

_Bool _isManualPause;

_Bool _isRecovering;

unsigned long long _loadStatus;

unsigned long long _playStatus;

unsigned long long _renderMode;

NSURL *_videoURL;

NSError *_error;

AVPlayerLayer *_playerLayer;

AVPlayerItem *_playerItem;

AVPlayer *_player;

double _recoverPlayTime;

}

@property(nonatomic) double recoverPlayTime; // @synthesize recoverPlayTime=_recoverPlayTime;

@property(nonatomic) _Bool isRecovering; // @synthesize isRecovering=_isRecovering;

@property(nonatomic) _Bool isManualPause; // @synthesize isManualPause=_isManualPause;

@property(nonatomic) _Bool isStoped; // @synthesize isStoped=_isStoped;

@property(retain, nonatomic) AVPlayer *player; // @synthesize player=_player;

@property(retain, nonatomic) AVPlayerItem *playerItem; // @synthesize playerItem=_playerItem;

@property(retain, nonatomic) AVPlayerLayer *playerLayer; // @synthesize playerLayer=_playerLayer;

@property(retain, nonatomic) NSError *error; // @synthesize error=_error;

@property(retain, nonatomic) NSURL *videoURL; // @synthesize videoURL=_videoURL;

- (_Bool)muted;

- (unsigned long long)renderMode;

@property(nonatomic) _Bool playable; // @synthesize playable=_playable;

@property(nonatomic) unsigned long long playStatus; // @synthesize playStatus=_playStatus;

@property(nonatomic) unsigned long long loadStatus; // @synthesize loadStatus=_loadStatus;

- (void).cxx_destruct;

- (void)updatePlayStatusIfNeeded:(unsigned long long)arg1;

- (void)layoutSubviews;

- (id)initWithFrame:(struct CGRect)arg1;

- (void)dealloc;

- (id)realPlayer;

- (double)bufferTime;

- (double)videoLength;

- (double)playTime;

- (void)setRenderMode:(unsigned long long)arg1;

- (void)setMuted:(_Bool)arg1;

- (void)observeValueForKeyPath:(id)arg1 ofObject:(id)arg2 change:(id)arg3 context:(void *)arg4;

- (_Bool)isCurrentItem:(id)arg1;

- (void)playerItemPlaybackStalled:(id)arg1;

- (void)playerItemNewAccessLogEntry:(id)arg1;

- (void)playerItemDidReachEnd:(id)arg1;

- (void)removeObserverForPlayer:(id)arg1;

- (void)addObserverToPlayer:(id)arg1;

- (void)removePlayProgressObserver;

- (void)setupPlayerItemWith:(id)arg1;

- (void)prepareToPlayAsset:(id)arg1 withKeys:(id)arg2 complete:(CDUnknownBlockType)arg3;

- (void)setUpPlayer;

- (void)seekToTime:(double)arg1 complete:(CDUnknownBlockType)arg2;

- (void)stopUpdatePlayTime;

- (void)startUpdatePlayTime;

- (_Bool)isPausing;

- (_Bool)isPlaying;

- (void)resume;

- (void)pause;

- (void)resetRecoverStatus;

- (_Bool)recover;

- (void)stop;

- (void)loadVideoInfoWithUrl:(id)arg1 complete:(CDUnknownBlockType)arg2;

- (void)play;

- (_Bool)isSupportAirPlay;

@end

#import <UIKit/UIView.h>

#import "NTESNBVideoPlayerProtocol-Protocol.h"

@class NSError, NSMutableDictionary, NSString, NSURL;

@protocol NTESNBVideoPlayCallback;

@interface NTESNBVideoPlayerBaseView : UIView <NTESNBVideoPlayerProtocol>

{

_Bool _isLive;

_Bool _muted;

_Bool _autoUpdateLiveStatus;

id <NTESNBVideoPlayCallback> _delegate;

unsigned long long _renderMode;

unsigned long long _bufferStrategy;

}

+ (id)nePlayerWithframe:(struct CGRect)arg1;

+ (id)playerWithType:(unsigned long long)arg1 frame:(struct CGRect)arg2;

@property(nonatomic) unsigned long long bufferStrategy; // @synthesize bufferStrategy=_bufferStrategy;

@property(nonatomic) _Bool autoUpdateLiveStatus; // @synthesize autoUpdateLiveStatus=_autoUpdateLiveStatus;

@property(nonatomic) unsigned long long renderMode; // @synthesize renderMode=_renderMode;

@property(nonatomic) _Bool muted; // @synthesize muted=_muted;

@property(nonatomic) _Bool isLive; // @synthesize isLive=_isLive;

@property(nonatomic) __weak id <NTESNBVideoPlayCallback> delegate; // @synthesize delegate=_delegate;

- (void).cxx_destruct;

- (void)seekToTime:(double)arg1 complete:(CDUnknownBlockType)arg2;

- (void)noticeVideoPlayerOfOrientation:(long long)arg1;

- (void)stopUpdatePlayTime;

- (void)startUpdatePlayTime;

@property(readonly, nonatomic) id realPlayer;

- (_Bool)isPausing;

- (_Bool)isPlaying;

- (void)resume;

- (void)pause;

- (_Bool)recover;

- (void)stop;

- (void)loadVideoInfoWithUrl:(id)arg1 complete:(CDUnknownBlockType)arg2;

- (void)play;

- (_Bool)isSupportVideoQualityReport;

- (_Bool)isSupportAirPlay;

@property(nonatomic) unsigned long long playStatus; // @dynamic playStatus;

@property(nonatomic) unsigned long long loadStatus; // @dynamic loadStatus;

@property(readonly, nonatomic) double playTime; // @dynamic playTime;

- (id)initWithFrame:(struct CGRect)arg1;

- (id)init;

- (void)dealloc;

- (void)clearStalledTimes;

- (void)didLoadStalled;

- (void)didPresentFirstPicture;

- (void)didStartLoad;

- (void)storeValue:(id)arg1 forKey:(id)arg2;

- (void)resetStatistics;

- (void)updateLiveStatus:(_Bool)arg1;

- (void)updatePlayTime;

- (void)updatePlayStatus:(unsigned long long)arg1;

- (void)updateLoadStatus:(unsigned long long)arg1;

- (void)noticeOfFirstPicPresent:(double)arg1;

@property(nonatomic) long long stalledTimes;

@property(nonatomic) double startLoadTimeStamp;

@property(retain, nonatomic) NSMutableDictionary *statisticsDic;

@property(retain, nonatomic) NSURL *videoURL;

// Remaining properties

@property(readonly, nonatomic) double bufferTime; // @dynamic bufferTime;

@property(readonly, copy) NSString *debugDescription;

@property(readonly, copy) NSString *description;

@property(readonly, nonatomic) NSError *error; // @dynamic error;

@property(readonly) unsigned long long hash;

@property(nonatomic) _Bool playable; // @dynamic playable;

@property(readonly) Class superclass;

@property(readonly, nonatomic) double videoLength; // @dynamic videoLength;

@end

#import "NSObject-Protocol.h"

@class NTESNBVideoPlayerBaseView;

@protocol NTESNBVideoPlayCallback <NSObject>

@optional

- (void)playerView:(NTESNBVideoPlayerBaseView *)arg1 didPresentFirstPicWithLoadTime:(double)arg2;

- (void)playerView:(NTESNBVideoPlayerBaseView *)arg1 liveStatusChangeTo:(_Bool)arg2;

- (void)playerView:(NTESNBVideoPlayerBaseView *)arg1 playAtTime:(double)arg2;

- (void)playerView:(NTESNBVideoPlayerBaseView *)arg1 controlStatusChangeTo:(unsigned long long)arg2;

- (void)playerView:(NTESNBVideoPlayerBaseView *)arg1 loadStatusChangeTo:(unsigned long long)arg2;

@end

#import "NSObject-Protocol.h"

@class NSDictionary, NSError, NSURL;

@protocol NTESNBVideoPlayCallback;

@protocol NTESNBVideoPlayerProtocol <NSObject>

@property(readonly, nonatomic) NSDictionary *statisticsDic;

@property(readonly, nonatomic) NSError *error;

@property(readonly, nonatomic) NSURL *videoURL;

@property(readonly, nonatomic) id realPlayer;

@property(readonly, nonatomic) _Bool playable;

@property(readonly, nonatomic) unsigned long long playStatus;

@property(readonly, nonatomic) unsigned long long loadStatus;

@property(readonly, nonatomic) double bufferTime;

@property(readonly, nonatomic) double playTime;

@property(readonly, nonatomic) double videoLength;

@property(nonatomic) unsigned long long bufferStrategy;

@property(nonatomic) _Bool autoUpdateLiveStatus;

@property(nonatomic) _Bool isLive;

@property(nonatomic) _Bool muted;

@property(nonatomic) unsigned long long renderMode;

@property(nonatomic) __weak id <NTESNBVideoPlayCallback> delegate;

- (void)seekToTime:(double)arg1 complete:(void (^)(void))arg2;

- (void)noticeVideoPlayerOfOrientation:(long long)arg1;

- (void)stopUpdatePlayTime;

- (void)startUpdatePlayTime;

- (_Bool)isPausing;

- (_Bool)isPlaying;

- (void)resume;

- (void)pause;

- (_Bool)recover;

- (void)stop;

- (void)loadVideoInfoWithUrl:(NSURL *)arg1 complete:(void (^)(void))arg2;

- (void)play;

- (_Bool)isSupportVideoQualityReport;

- (_Bool)isSupportAirPlay;

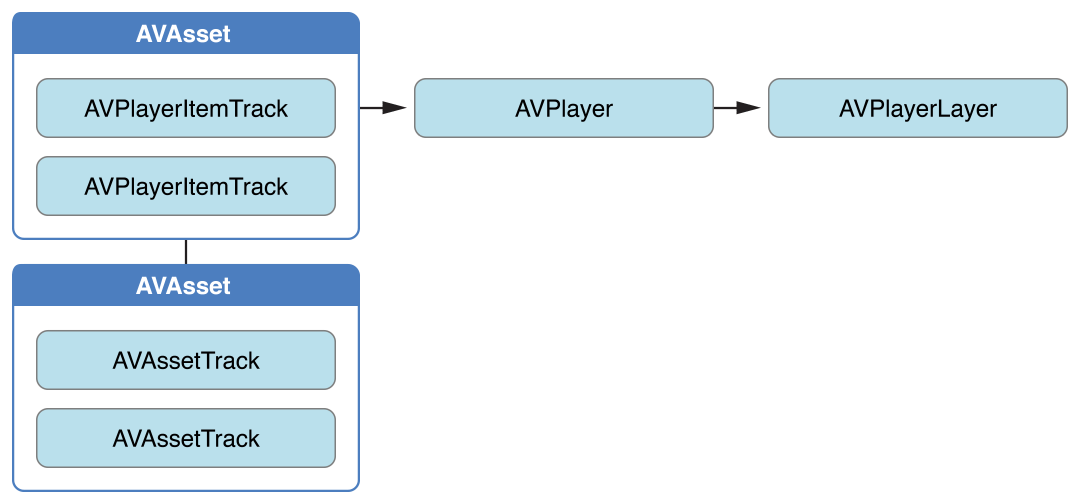

@end由上面的NTESNBSystemVideoPlayerView,可以看到有AVPlayer、AVPlayerItem、AVPlayerLayer三种类型的变量,这三的关系如下图

They are used as follows:

AVPlayerItem *item = [[AVPlayerItem alloc] initWithURL:url];
AVPlayer *player = [AVPlayer playerWithPlayerItem:item];
AVPlayerLayer *playerLayer = [AVPlayerLayer playerLayerWithPlayer:player];
playerLayer.videoGravity = AVLayerVideoGravityResizeAspect;
playerLayer.frame = self.bounds; // size the layer to the view; with no frame set, nothing is visible
[self.layer addSublayer:playerLayer];

AVPlayerItem

playerItem is the object that manages the media resource, including its timing and state. (AVPlayerItem models the timing and presentation state of an asset played by an AVPlayer object. It provides the interface to seek to various times in the media, determine its presentation size, identify its current time, and much more.)

The client observes the playerItem's status and loadedTimeRanges properties.

typedef NS_ENUM(NSInteger, AVPlayerItemStatus) {
    AVPlayerItemStatusUnknown,
    AVPlayerItemStatusReadyToPlay,
    AVPlayerItemStatusFailed
};

AVPlayerItemStatusReadyToPlay means the item is ready to play; at that point CMTimeGetSeconds(currentItem.duration) returns the total length of the video in seconds. AVPlayerItemStatusFailed means the item cannot be played.
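Observation of these two properties is typically done with key-value observing. The following is a minimal sketch under that assumption, not the code NetEase uses: the class name PlayerItemObserver and the context pointer are hypothetical, and ARC is assumed.

```objc
#import <Foundation/Foundation.h>
#import <AVFoundation/AVFoundation.h>

// Unique context pointer so this class only handles its own KVO callbacks.
static void *PlayerItemContext = &PlayerItemContext;

// Hypothetical observer class, for illustration only.
@interface PlayerItemObserver : NSObject
@property (nonatomic, strong) AVPlayerItem *item;
- (instancetype)initWithItem:(AVPlayerItem *)item;
@end

@implementation PlayerItemObserver

- (instancetype)initWithItem:(AVPlayerItem *)item {
    if ((self = [super init])) {
        _item = item;
        // Register for changes to the two properties discussed above.
        [item addObserver:self forKeyPath:@"status"
                  options:NSKeyValueObservingOptionNew context:PlayerItemContext];
        [item addObserver:self forKeyPath:@"loadedTimeRanges"
                  options:NSKeyValueObservingOptionNew context:PlayerItemContext];
    }
    return self;
}

- (void)observeValueForKeyPath:(NSString *)keyPath
                      ofObject:(id)object
                        change:(NSDictionary<NSKeyValueChangeKey, id> *)change
                       context:(void *)context {
    if (context != PlayerItemContext) {
        // Not ours: let the superclass handle it.
        [super observeValueForKeyPath:keyPath ofObject:object change:change context:context];
        return;
    }
    if ([keyPath isEqualToString:@"status"]) {
        switch (self.item.status) {
            case AVPlayerItemStatusReadyToPlay:
                NSLog(@"ready, duration = %.1f s", CMTimeGetSeconds(self.item.duration));
                break;
            case AVPlayerItemStatusFailed:
                NSLog(@"failed: %@", self.item.error);
                break;
            case AVPlayerItemStatusUnknown:
                break;
        }
    } else if ([keyPath isEqualToString:@"loadedTimeRanges"]) {
        NSLog(@"buffered ranges: %@", self.item.loadedTimeRanges);
    }
}

- (void)dealloc {
    // Observers must be removed before the observer goes away.
    [_item removeObserver:self forKeyPath:@"status" context:PlayerItemContext];
    [_item removeObserver:self forKeyPath:@"loadedTimeRanges" context:PlayerItemContext];
}

@end
```

The decompiled NTESNBSystemVideoPlayerView declares observeValueForKeyPath:ofObject:change:context: as well as addObserverToPlayer: and removeObserverForPlayer:, which is consistent with a KVO-based approach, though the header alone does not show which key paths it observes.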

Observing loadedTimeRanges tells you how many seconds of the current video have been buffered.

NSArray *loadedTimeRanges = [currentItem loadedTimeRanges];
CMTimeRange timeRange = [loadedTimeRanges.firstObject CMTimeRangeValue]; // the buffered range
double startSeconds = CMTimeGetSeconds(timeRange.start);
double durationSeconds = CMTimeGetSeconds(timeRange.duration);
NSTimeInterval result = startSeconds + durationSeconds; // total buffered progress, in seconds

The code below updates the progress bar and the time label in real time during playback.

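- (void)addVideoTimerObserver {
    __weak typeof(self) weakSelf = self;
    // Fire once per second on the main queue so the UI can be updated directly.
    _timeObser = [_player addPeriodicTimeObserverForInterval:CMTimeMake(1, 1) queue:dispatch_get_main_queue() usingBlock:^(CMTime time) {
        __strong typeof(weakSelf) strongSelf = weakSelf; // do not retain self from the block
        if (!strongSelf) {
            return;
        }
        // Total length in seconds, read from the current item (an assumption; the original left videoLength undefined).
        double videoLength = CMTimeGetSeconds(strongSelf->_player.currentItem.duration);
        // Progress value the slider needs, in the range 0 to 1.
        float currentTimeValue = (isfinite(videoLength) && videoLength > 0) ? (float)(CMTimeGetSeconds(time) / videoLength) : 0;
        NSString *currentString = [strongSelf getStringFromCMTime:time];
        if ([strongSelf.someDelegate respondsToSelector:@selector(flushCurrentTime:sliderValue:)]) {
            // Call the delegate method to update the slider progress and the time label (argument types assumed).
            [strongSelf.someDelegate flushCurrentTime:currentString sliderValue:currentTimeValue];
        } else {
            NSLog(@"no response");
        }
    }];
}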

- (NSString *)getStringFromCMTime:(CMTime)time
{
    // Invalid or indefinite times have no meaningful seconds value.
    if (!CMTIME_IS_NUMERIC(time))
    {
        return @"00:00";
    }
    // Current playback time in whole seconds; plain arithmetic avoids the time-zone dependence of NSDate/NSCalendar.
    NSInteger totalSeconds = (NSInteger)CMTimeGetSeconds(time);
    NSInteger hours = totalSeconds / 3600;
    NSInteger minutes = (totalSeconds % 3600) / 60;
    NSInteger seconds = totalSeconds % 60;
    if (hours > 0)
    {
        return [NSString stringWithFormat:@"%ld:%02ld:%02ld", (long)hours, (long)minutes, (long)seconds];
    }
    else
    {
        return [NSString stringWithFormat:@"%02ld:%02ld", (long)minutes, (long)seconds];
    }
}

The full-screen state

As shown above, in full-screen mode the player has been taken out of the table view and placed in the view controller's view.
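One way such a move could be done is sketched below. This is a guess at the general technique, not NetEase's implementation: the helper name MovePlayerViewToFullScreen and the 0.3 s animation duration are hypothetical.

```objc
#import <UIKit/UIKit.h>

// Hypothetical helper, not taken from the NetEase headers: reparents a player
// view from its current superview (for example a table view) into `host.view`.
static void MovePlayerViewToFullScreen(UIView *playerView, UIViewController *host) {
    // Express the current frame in the host view's coordinate space so the
    // player does not jump when its superview changes.
    CGRect startFrame = [playerView.superview convertRect:playerView.frame
                                                   toView:host.view];
    // addSubview: removes the view from its previous superview automatically.
    [host.view addSubview:playerView];
    playerView.frame = startFrame;
    // Animate from the in-list position to the full bounds of the host view.
    [UIView animateWithDuration:0.3 animations:^{
        playerView.frame = host.view.bounds;
    }];
}
```

Keeping the player at the same level as the cells, rather than inside one, makes this kind of reparenting simpler, since cell reuse never tears the player down while it is playing.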